Shaping ideas into visionOS apps

Juan Moya

Helping startups and product teams turn complex XR ideas into intuitive spatial computing experiences for Apple Vision Pro, from early prototypes to App Store launch.

Free 30-minute project assessment

Great spatial computing isn't just about placing 3D models in a room. It's about how those objects feel and behave. It’s about intuitive experiences that solve real problems.

Our approach begins by challenging ideas to discover which features truly deliver value as an XR experience. We dive deep into device capabilities — hand tracking, object persistence, spatial audio, surrounding awareness — because this is where the magic of immersive experiences happens. Rapid prototyping lets us refine these interactions in short, focused iterations.

At every step, we aim to create unforgettable moments for users.

Custom gestures that feel intuitive and magical.

Custom UI with precise visual and spatial audio cues that give users confidence.

Experiences that feel like part of the real world, with surrounding awareness.

A mix of independent ventures and technical partnerships.

Bring your smart home to life on Vision Pro

Passionate about home automation, I created Homerise to offer an intuitive and immersive way to control the smart home. Early on, the iOS app introduced augmented reality controls that let users place devices in their space and interact with them simply by pointing their iPhone. With the release of Apple Vision Pro, bringing Homerise to visionOS was a natural evolution: even before visionOS 26 was announced, it featured spatial interactions such as widget anchoring with surface tracking, wall occlusion, and proximity-based activation. In addition, Wrist Control with room detection delivers a seamless experience where lifting your wrist instantly reveals a control panel contextualized to the room you’re in.

Restoring voice and autonomy through AI and XR

Motivated by a personal family experience, Fabio (CEO) set out to help people living with Amyotrophic Lateral Sclerosis (ALS) continue communicating with their loved ones after losing the ability to speak. He approached me to transform this vision into a visionOS app. The application enables face-to-face conversations by listening to and transcribing the other person’s voice, then using AI to generate possible replies; once the user selects a reply, the app speaks it aloud using the user’s cloned voice. I am leading the app’s development on visionOS, and my experience with Apple Vision Pro has been instrumental in shaping an ergonomic, accessible design that pushes the platform’s capabilities for motion-impaired users.

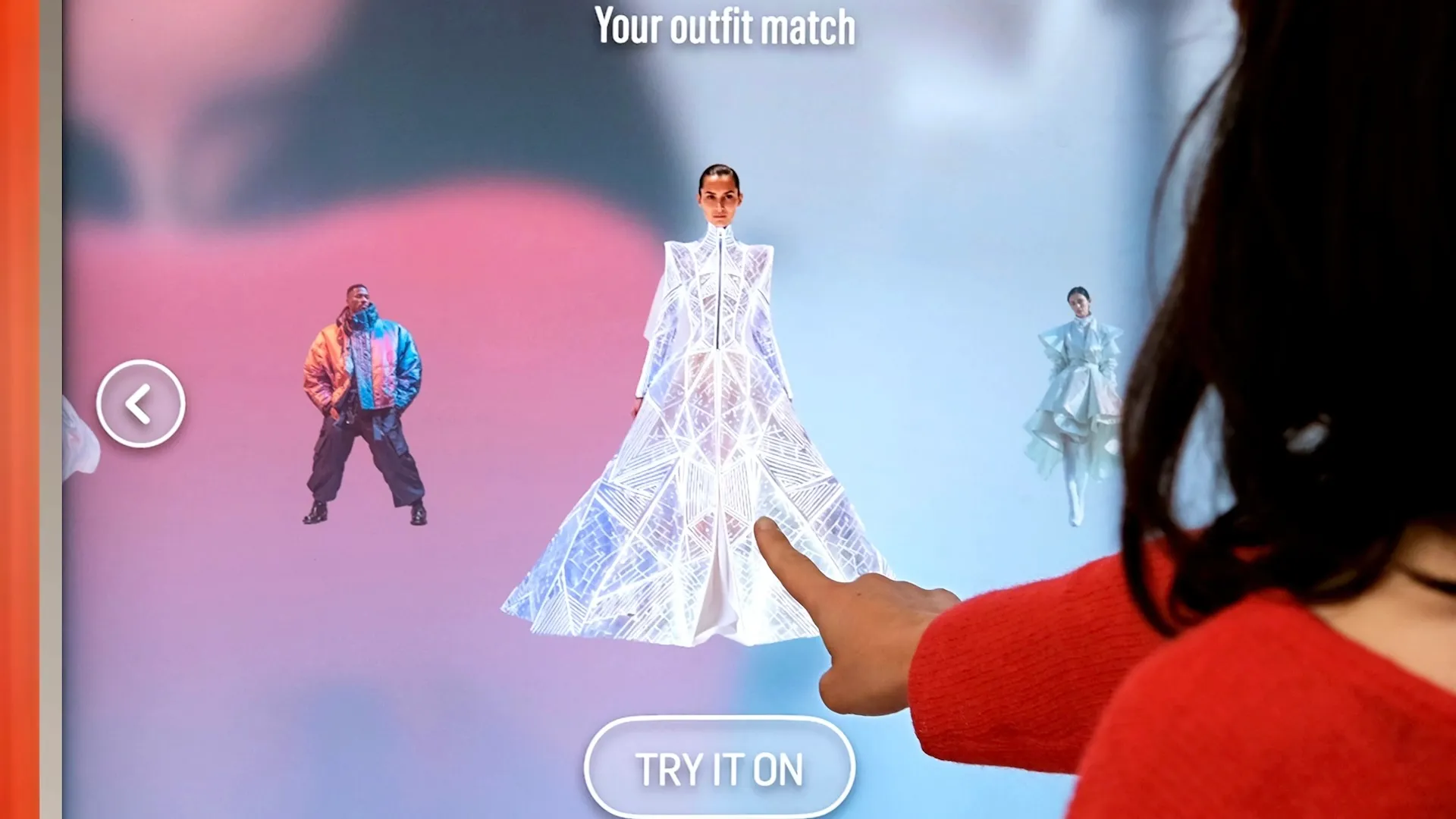

Interactive AR Mirrors / AI Photo booth

In collaboration with Atomic Digital Design, I contributed to the iOS development of multiple AR Mirror experiences using Snap CameraKit filters, as well as an AI-powered photo booth. These installations were designed for high-traffic retail and event environments, including Orange Festivals, Westfield, Armani Beauty, F1 Austin Vegas, and Patins en Folie. I was responsible for managing the full lifecycle of each AR experience through to App Store publication. Project requirements varied widely, ranging from simple photo delivery via email to on-site printing through IPPS and integrated payments using SumUp terminals.

Sharing knowledge to push the platform forward.

Spatial computing opens up amazing possibilities, but it also introduces unique UX challenges. In this session, we’ll explore how visual and audio feedback play a crucial role in creating rich mixed reality experiences on Vision Pro: we’ll take a custom iOS slider as an example and adapt it for visionOS.

Watch talk

Whether you need a dedicated visionOS expert to join your team or a full-cycle developer to bring an app idea to life, I am ready to help.

Free 30-minute session

For any questions